From Agile to AI: Same Transformation Mistakes Cost Millions

By: Kumar Dattatreyan

Your executive team just approved a major AI initiative. The consultants are hired. The pilot programs are launching. Everyone's excited about the productivity gains and competitive advantages ahead.

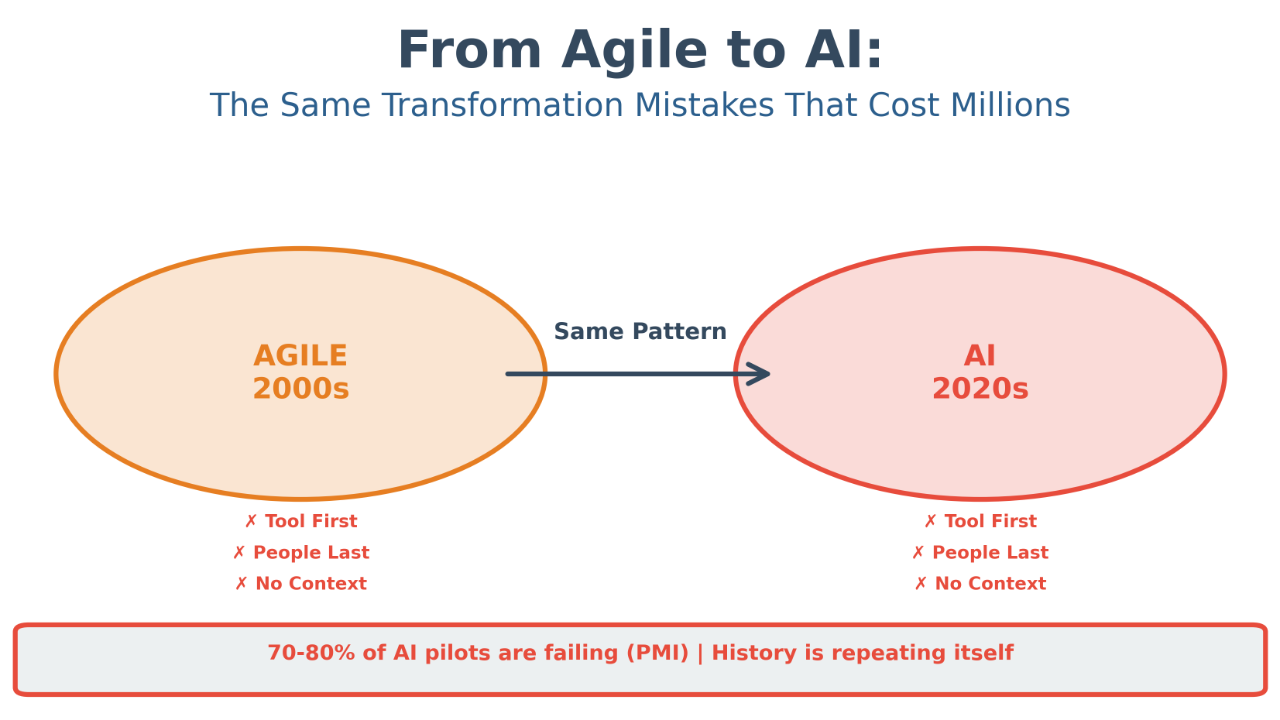

But here's the uncomfortable truth: if your organization struggled with Agile adoption over the past two decades, you're about to watch history repeat itself with AI. Same patterns. Same mistakes. Same expensive failures.

According to the Project Management Institute, 70-80% of AI pilots are currently failing. And if you've been paying attention, that number sounds eerily familiar. It's almost identical to the Agile transformation failure rates we saw in the 2000s and 2010s.

The lesson? Technology changes. Human behavior doesn't.

The Pattern We Refuse to See

Twenty years ago, organizations rushed to adopt Agile methodologies. They sent teams to two-day Scrum certifications, hired armies of coaches, and implemented frameworks like SAFe across entire enterprises. Billions of dollars later, most transformations delivered disappointing results.

Why? Because they approached Agile as a technology problem when it was actually a leadership and organizational problem.

Now we're doing it again with AI.

Sanjiv Augustine, founder and CEO of LitheSpeed and a 30-year transformation veteran, sees the pattern clearly. As someone who advised executives at Capital One, Nationwide Insurance, Samsung, and Walmart through their Agile journeys, he's watching organizations make the same mistakes with AI that they made with Agile.

"Adopting AI as just a tool or technique without larger consideration for the human beings involved, without consideration for the organization—doing it in a mindless way," Augustine explains. "We're bringing in something and using it to cut costs, to lay off developers. That's just not the way to do it."

The parallels are striking. In both cases, organizations:

- Led with tools and frameworks instead of people and purpose

- Ignored organizational context and culture

- Failed to secure genuine leadership commitment

- Treated transformation as a technical deployment rather than organizational change

- Measured success by adoption metrics rather than business outcomes

Here's what makes this even more frustrating: we should know better by now. Agile has been around for nearly 25 years. The lessons from those transformations are documented, studied, and readily available. Yet organizations are racing toward AI adoption like those lessons don't exist.

The Cost of Mindless Adoption

Let's talk about what "mindless adoption" actually looks like, because it's happening in boardrooms right now.

A Fortune 500 company brings in an AI tool to "optimize" customer service. The technology works beautifully in the demo. The cost savings projections look incredible on paper. Leadership approves the budget.

But nobody asked the customer service team how the work actually flows. Nobody considered how customers would react to AI-generated responses. Nobody thought about what happens to institutional knowledge when you eliminate half the team. Nobody built a business case beyond "AI will save us money."

Six months later, customer satisfaction is down. The remaining team is overwhelmed. The AI tool sits partially implemented because it doesn't fit the actual workflow. Millions spent. Little value delivered.

This isn't a hypothetical. This is the pattern playing out across industries right now.

Augustine points to a sobering statistic: only about 250,000 people worldwide are actually developing AI tools and models at the operating system level. Those quarter-million developers are creating technologies that will affect seven billion people. And most of them have no idea how their tools will actually be used in organizational contexts.

Meanwhile, the executives approving AI initiatives often have even less understanding of the technology's capabilities, limitations, and organizational implications.

The gap between AI's potential and organizations' ability to harness it responsibly isn't just concerning—it's dangerous. As Augustine notes, "AI is an existential threat because it's a powerful technology. Whether it becomes dangerous or beneficial will be decided by human beings."

Why Smart Organizations Learn from Agile's Lessons

Not every Agile transformation failed. Some organizations—Nationwide Insurance, Capital One before their strategic pivot, and others—built genuine agility into their operating models. These success stories share common characteristics that apply directly to AI adoption.

First, they treated transformation as an organizational capability, not a project. At Nationwide, you can walk from team to team and see iteration planning meetings, Jira boards supporting the mechanics, and what they now call "Agile Delivery Leads" (the evolved role of Scrum Masters). They layered lean portfolio management on top of team-level practices and scaled it enterprise-wide.

But notice what came first: they didn't lead with the framework. They built the foundation of iterative delivery and incremental improvement into how leaders thought about work. The framework supported the thinking, not the other way around.

Second, successful transformations subordinated technology to two things: business purpose and human beings in the organization. Every tool, every process, every metric had to answer two questions: "Does this serve our customers?" and "Does this respect and empower our people?"

Third, they approached adoption incrementally and learned constantly. They didn't try to transform the enterprise in one massive push. They ran experiments. They validated what worked. They adjusted based on feedback. They built capability gradually.

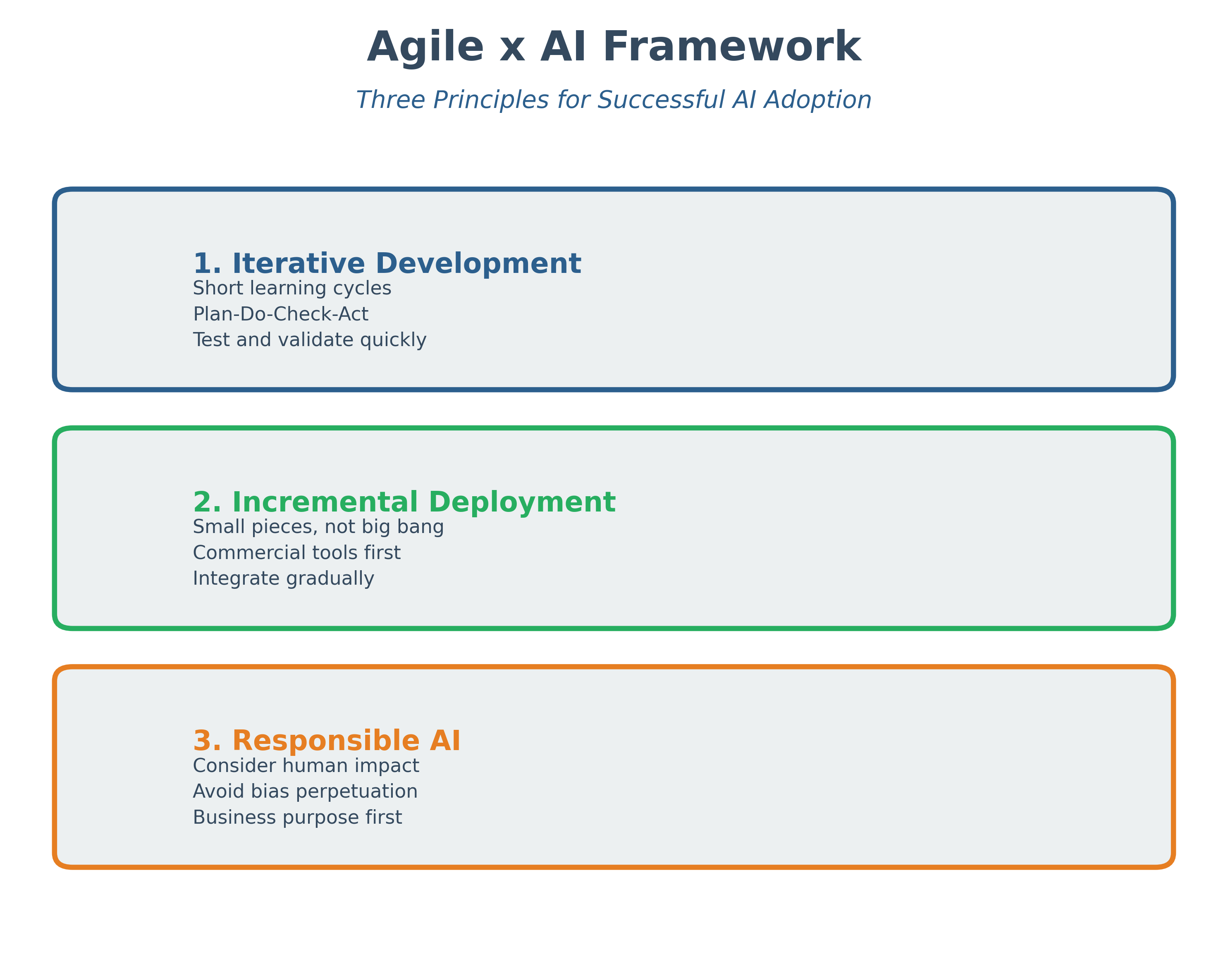

These same principles apply to AI adoption. In fact, Augustine and his team at LitheSpeed have codified this approach as "Agile x AI"—using proven Agile principles to guide AI transformation.

The Agile x AI Framework: Three Principles That Work

If you already have an Agile foundation in your organization, you possess something valuable: a proven approach to iterative learning and incremental change. You can leverage that foundation for AI adoption.

1. Iterative Development: Short Learning Cycles

Instead of deploying AI in one big implementation, use the Plan-Do-Check-Act cycle. Run small experiments. Test hypotheses. Learn quickly what works and what doesn't. Validate your assumptions with real users before scaling.

One organization Augustine works with is integrating a commercially available AI tool into customer service workflows. They're not rebuilding their entire customer service operation. They're testing one integration point, measuring impact, learning from the results, then deciding on the next iteration.

This approach treats failure as learning, not catastrophe. When a small experiment doesn't work, you've gained valuable information at low cost. When a massive deployment fails, you've wasted millions and damaged trust.

2. Incremental Deployment: Break It Down

Don't try to AI-enable your entire operation at once. Identify one process, one workflow, one use case where AI could add clear value. Implement there. Learn. Refine. Then move to the next opportunity.

Start with commercially available products integrated into existing business processes. You don't need to build everything custom. You need to understand how AI tools work in your specific context before you invest in bespoke solutions.

This incremental approach also manages risk. If one AI integration doesn't deliver value, it doesn't derail your entire transformation. You learn, adjust, and try something different.

3. Responsible AI: People and Purpose First

This is where many AI adoptions go catastrophically wrong. The technology becomes the goal instead of the means.

Responsible AI means:

- Understanding the human impact before deployment

- Checking for bias in your machine learning models

- Ensuring AI serves your business purpose, not just cost reduction

- Maintaining human judgment in critical decisions

- Building trust through transparency about how AI is used

As Augustine emphasizes, "If we don't subjugate AI to two things—our business purpose and the human beings in the organization—we will fail, if not today, then tomorrow."

This isn't just ethical practice. It's business necessity. AI tools that undermine trust, perpetuate bias, or dehumanize customer interactions will destroy value faster than they create it.

The Leadership Transformation Nobody Talks About

Here's the part that makes executives uncomfortable: successful AI adoption requires you to transform before your organization does.

You cannot lead an organization working in new ways unless you first understand those new ways yourself. You cannot make sound decisions about AI tools if you don't understand their capabilities and limitations. You cannot subordinate AI to human needs if you see it primarily as a cost-reduction lever.

This mirrors the Agile lesson many organizations learned too late: you cannot mandate agility from the top while operating in completely different ways yourself. The disconnect destroys credibility and undermines the entire transformation.

Augustine puts it bluntly: "If leaders fail, organizations will fail. A successful transition to this new way of working starts with the leader."

What does this mean practically?

It means using AI tools yourself. Not just hearing about them in presentations—actually using ChatGPT, Claude, or other LLMs in your daily work. Understanding their strengths and weaknesses through direct experience.

It means learning enough about AI to ask intelligent questions. You don't need to become a data scientist, but you need to understand the difference between traditional machine learning models and agentic AI systems. You need to know what questions to ask when someone proposes an AI solution.

It means being willing to admit uncertainty. The executives who say "I don't really understand AI, but it seems important" are more trustworthy than those who claim expertise they don't have.

And it means staying on the leading edge not because you love technology, but because you understand the existential risk of falling behind. As Augustine notes, leaders need to be "inside the tent looking out, not outside the tent looking in."

Three Actions You Can Take This Week

Only action closes the gap between reading about transformation and actually transforming. Here are three specific steps you can take immediately:

1. Audit Your Current AI Initiatives for Mindless Adoption Warning Signs

Look at every AI pilot, proof of concept, or implementation currently underway. Ask:

- Did we define the business purpose before selecting the tool?

- Have we involved the people who will actually use this AI in the design?

- Do we understand the human impact (both positive and negative)?

- Are we measuring success by business outcomes or just adoption rates?

- Is this solving a real problem or chasing a trend?

If you can't answer these questions clearly, you have a mindless adoption problem.

2. Apply Agile x AI Principles to Your Highest-Risk Initiative

Pick the AI initiative with the most potential downside risk. Apply the three-part framework:

- Break the deployment into smaller iterations with clear learning objectives

- Create incremental rollout phases rather than big-bang implementation

- Assess responsible AI implications at each phase

Treat this as your pilot for a better approach. Learn what works. Document it. Scale it to other initiatives.

3. Start Your Personal AI Learning Journey

Block one hour this week to actually use an AI tool for real work. Not a demo. Not a presentation. Real work.

Use ChatGPT to analyze a problem you're facing. Ask Claude to critique a strategy document. Use an AI tool to automate something tedious in your workflow.

Pay attention to what works, what doesn't, where you need to add judgment, where the AI adds value, and where it hallucinates or makes mistakes.

You cannot lead what you don't understand. Understanding starts with direct experience.

The Choice That's Not Really a Choice

Some leaders treat AI adoption as optional. They're wrong.

AI will transform how work gets done. The only question is whether your organization will lead that transformation intelligently or stumble through it mindlessly.

The Agile era taught us that methodology matters less than mindset. That frameworks fail without foundational thinking. That tools without wisdom create expensive messes.

The AI era is teaching us the same lessons. The organizations that learned from Agile's failures have a significant advantage. They've built muscles for iterative learning, incremental improvement, and human-centered technology adoption.

But those advantages only matter if you use them.

Twenty years from now, we'll look back at this moment. Some organizations will have built genuine AI capability that serves customers and empowers people. Others will have burned through budgets on failed initiatives and created organizational cynicism that blocks future innovation.

The difference won't be the technology they chose. It will be the wisdom with which they adopted it.

History doesn't repeat itself. But it rhymes. And right now, the Agile transformation song is playing again with AI lyrics.

Will you hear the melody this time? Or will you wait until the expensive encore?

Ready to assess your organization's AI transformation readiness? Take our AI Adoption Assessment to identify gaps between where you are and where you need to be before scaling AI initiatives.

Want to explore these concepts in more depth? Listen to related episodes from The Meridian Point:

- Episode 152: From Agile to AI - Avoiding the Same Transformation Mistakes

- Episode 147: How AI is Disrupting Leadership Communication & Executive Coaching

- Episode 123: Behavioral DNA - How AI is Revolutionizing Business

- View Full Playlist: The Meridian Point